Projects

A diverse set of projects targeting explainable medical AI

To reach the KEMAI research goals, we have put together a diverse set of PhD projects in computer science, medicine, ethics, and philosophy. The outlines below are examples of such projects. We expect KEMAI doctoral researchers to work on one of these projects, or on an alternative project they propose themselves that fits under the KEMAI umbrella.

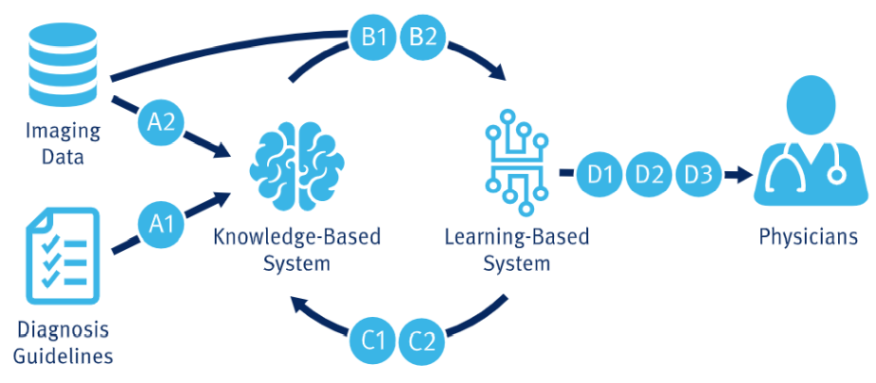

Below is a list of our project proposals, organized by research topic area. These areas of focus include Data Exploitation, which involves harvesting medical guidelines and investigating contrastive pre-training; Knowledge Infusion, which enriches learning models with medical guidelines; Knowledge Extraction, which derives medical guidelines from trained models; and Model Explanation, which communicates the decision process of trained models. Each project is designed to advance our understanding and application of these key areas, contributing to the overall improvement of medical diagnostics and treatments through cutting-edge research and technology.

The following figure shows an overview of how the described projects contribute towards the overall KEMAI mission, which is detailed on the KEMAI mission page.

Data Exploitation

A1 – Harvesting Medical Guidelines using Pre-trained Language Models

Project Leads: Prof. Scherp (Computer Science), Prof. Braun (Computer Science), Dr. Vernikouskaya (Medicine)

This project focuses on researching multimodal pre-trained language models (LM) that extract symbolic knowledge on medical diagnosis and treatments from input documents. The models will incorporate structured knowledge and represent extracted information using an extended process ontology. The project applies these models to COVID-19 related imaging and treatments, contributing to OpenClinical’s COVID-19 Knowledge reference model and adapting to various benchmarks.

A2 – Stability Improved Learning with External Knowledge through Contrastive Pre-training

Project Leads: Jun.-Prof. Götz (Medicine), Prof. Scherp (Computer Science)

This project aims to improve machine learning reliability in small data settings by learning from disconnected datasets using contrastive learning. It investigates whether contrastive learning can reduce classifier susceptibility to confounders, reverse confounding effects, and identify out-of-distribution test samples. The project seeks approaches that address these technical challenges.

Knowledge Infusion

B1 – Neuro-Symbolic Integration with Information Constraints (already assigned)

Project Leads: Prof. Braun (Computer Science), Prof. A. Beer (Medicine)

This project designs neuro-symbolic decision networks for bronchial carcinoma PET/CT imaging, integrating symbolic knowledge and neural networks. It involves optimizing network structures and information flow to improve classification procedures by including patient and guideline information. The project also tests these networks in a meta-learning setting for retraining on novel problems.

B2 – Semantic Design Patterns for High-Dimensional Diagnostics

Project Leads: Prof. Kestler (Medicine), Prof. M. Beer (Medicine)

This project defines semantic design patterns for incorporating semantic domain knowledge (SemDK) in ML algorithms to improve clinical predictions and tumor characterization. The patterns will be categorized by their mechanisms and knowledge representation, providing guidelines for application. The project evaluates these patterns in image analysis and molecular diagnostics based on high-dimensional data.

Knowledge Extraction

C1 – Enriching Ontological Knowledge Bases through Abduction (already assigned)

Project Leads: Prof. Glimm (Computer Science), Prof. Kestler (Medicine)

This project uses abductive reasoning based on ontological knowledge to explain ML system outcomes. It focuses on developing practical abduction algorithms for consequence-based procedures, extending the deductive reasoner ELK. The research aims to explicate and exploit hidden knowledge in learned systems, benefiting related projects by providing semantic domain knowledge.

C2 – Learning Search and Decision Mechanisms in Medical Diagnoses

Project Leads: Prof. Neumann (Computer Science), Jun.-Prof. Götz (Medicine)

This project studies human attentive search and object attention principles for vision-based medical diagnosis. It investigates mechanisms of object-based attention and visual routines for task execution. The goal is to formalize human visual search strategies and integrate them into deep neural networks (DNNs) for improved medical diagnosis.

Model Explanation

D1 – Accountability of AI-based Medical Diagnoses (already assigned)

Project Leads: Prof. Steger (Ethics), Prof. Ropinski (Computer Science)

This project addresses the ethical analysis of AI system designs for medical diagnoses. It focuses on determining which AI-supported processes need to be explainable and transparent, generating comprehensive information to help users understand AI-driven medical decisions.

D2 – Explainability, Understanding, and Acceptance Requirements

Project Leads: Prof. Hufendiek (Philosophy), Prof. Glimm (Computer Science), Dr. Lisson (Medicine)

This project applies philosophical insights on understanding and explanations to the use of AI in medical diagnosis. It clarifies the roles of understanding and abductive reasoning in medical diagnosis, identifies conflicts between stakeholders, and suggests ways to develop and integrate AI explanations with human experts' reasoning processes.

D3 – Guideline-Based Explainable Visualizations

Project Leads: Prof. Ropinski (Computer Science), Prof. Hufendiek (Philosophy), Dr. Lisson (Medicine)

This project develops visualization-based explainability techniques for AI-based medical detection and diagnosis. It targets decisions based on individual cases, using medical diagnosis guidelines and classification systems. The project derives visualization primitives from diagnostic features and develops XAI techniques that make AI decisions as transparent as those of radiologists.

Medical PhD Projects (10 months, MD)

The PhD projects outlined above are complemented by medical PhD projects, which are targeted towards medical researchers and build on the technical and ethical projects. The medical PhD projects can be supervised by one of the KEMAI medical partners. In this area, we foresee the following projects:

M1 – Guideline Analysis

This project focuses on systematically analyzing medical guidelines for their integration into AI systems aimed at improving medical diagnostics. The doctoral researcher will assess guidelines, extract relevant rules, and evaluate their impact on AI models with medical practitioners.

M2 – Explanation Ranking

This project aims to evaluate and rank explanation techniques for AI-supported medical diagnoses. The doctoral researcher will develop a taxonomy of explanations and conduct evaluations with medical practitioners to determine their effectiveness.

M3 – Explanation Requirements for COVID-19 CT Imaging

This project focuses on the requirements for explainable AI in COVID-19 CT imaging. The doctoral researcher will analyze the diagnostic process, conduct expert interviews, and propose guidelines for integrating explainable AI.

M4 – Explanation Requirements for Bronchial Carcinoma PET/CT Imaging

Similar to M3, this project addresses the requirements for explainable AI in bronchial carcinoma PET/CT imaging. The research will follow a similar protocol but consider the dual-modality nature of the diagnosis.

M5 – Explanation Requirements for Echinococcosis PET/CT Imaging

Complementing M3 and M4, this project analyzes the requirements for explainable AI in echinococcosis PET/CT imaging. The research will follow a similar protocol, accounting for the rarity of the disease and the resulting sparsity of data.

M6 – LLM Explanation Evaluation

This project investigates the use of large language models (LLMs) to support explainable AI in medical diagnosis. The doctoral researcher will experiment with LLMs, analyze their outputs with medical experts, and identify requirements for future research to enhance their utility.

KEMAI - Research Training Group on Medical XAI
